Due to the high compression rates and stellar in-memory architecture of SSAS Tabular, most people with smaller models do not experience performance problems (in-spite of employing bad data modeling techniques and inefficient DAX measures). However, as the size of your models increase, you will start to see performance issues creep up, especially if you are not paying attention to data modeling and DAX measures. Last week, I gave a presentation at the PASS Summit 2015 on my experience of building a 150 GB Tabular model in SSAS 2012. During that, I shared my story on how some of the DAX measures with IF statements were causing performance issues and how to work-around that issue by rewriting your DAX measures. During that day, Microsoft also announced that they resolved this issue in SQL 2016, so I thought of demonstrating the issue, workaround and also the fix in SSAS 2016.

Issue in SSAS 2014 (& older versions)

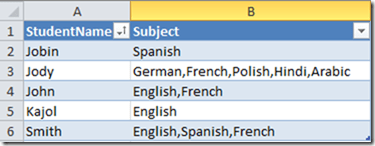

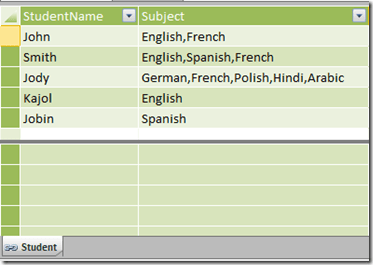

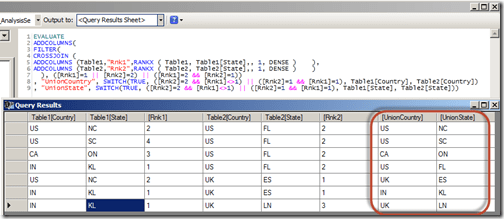

For demonstrating the issue, I will be writing queries against the Adventure Works model in SSAS 2014 and using MDX Studio to show the server timings. Let me start with the below query

WITH MEASURE ‘Date'[test] = If ( 1 = 2, [Internet Total Sales], [Reseller Total Sales] )

SELECT NON EMPTY { [MEASURES].[Test] } ON COLUMNS,

NON EMPTY (

{ [Date].[Calendar Year].Children },

{ [Product].[Product ID].Children },

{ Geography.[Country Region Name].Children } ) ON ROWS

FROM [Model]

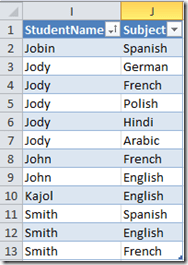

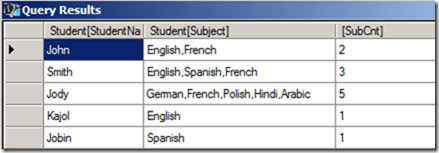

The above MDX query defines a DAX measure called Test, which depending on the condition displays either Internet Total Sales or the Reseller Total Sales (To make it simple, I just made a static condition 1=2 but that can be replaced by any dynamic condition also). The query results should display the Test measure for Year, Product ID and Country. Now, normally we would expect that the Test measure should only execute the true part of the IF statement. But let us execute this in MDX Studio and see what actually happens.

You can see that both the branches of the IF statement are being executed, even though we expect only the true part to be executed. For smaller models, it might not make a difference but for large models with expensive measures, this might cause severe performance issues.

Workaround in SSAS 2014 (& older versions)

The workaround for this issue is to rewrite your DAX such that we ensure that the measures get executed only if the condition is true.

WITH MEASURE ‘Date'[test] = CALCULATE([Internet Total Sales], FILTER(Currency, 1=2)) + CALCULATE( [Reseller Total Sales], FILTER(Currency, 1<>2))

SELECT NON EMPTY{[MEASURES].[Test]} ON COLUMNS,

NON EMPTY({[Date].[Calendar Year].children}, {[Product].[Product ID].children},{Geography.[Country Region Name].children}) ON ROWS

FROM [Model]

Note that the measure has been rewritten as the sum of two CALCULATE functions. The key is to use a table in the filter clause within the CALCULATE that satisfies the below conditions

-

Is related to the fact table of the measure

-

Is low in cardinality (you can also use a low cardinality column instead of a table)

-

Is not being used in the calculations for the measure/condition. If yes, do some extra testing to make sure the performance is not worse

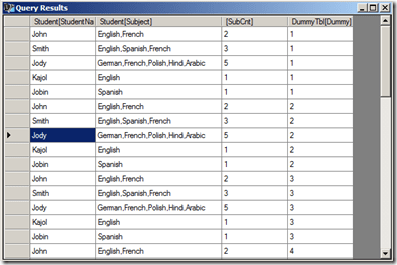

The reasoning behind the table being connected to fact table is because the calculate() with the false condition has to evaluate to zero / BLANK so that the result of the Test measure would only be the one appropriate measure. If the table is not related, you will end up with the sum of both the measures. A low cardinality table or column is preferred because in this technique, you will see that there are 2 additional queries being sent to the storage engine, which evaluates the FILTER part for the two measures. If the tables have high cardinality, the time for the FILTER queries will take more time. The reason why I said that the table or column should not be used in the measure calculations or condition is because I have seen that in certain conditions, this could actually make the performance worse and still execute both the branches. So just make sure you do some extra testing.

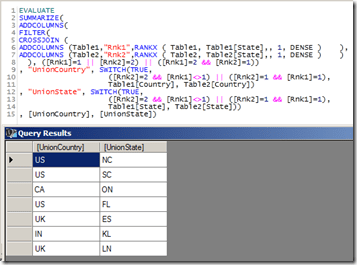

That said, let us look at the scans for the above query. You can see that only the Reseller Sales measure is executed. Also, if you notice carefully, there are 2 extra scans which basically check the filter condition for Currency. In large models, these scans for low cardinality dimensions will be almost negligible and the time for these extra scans will be much lesser than the time taken to execute the other measure also. In this case, the Adventure Works model is just 18 MB, so you won’t see much of a difference.

New Optimization in SSAS 2016

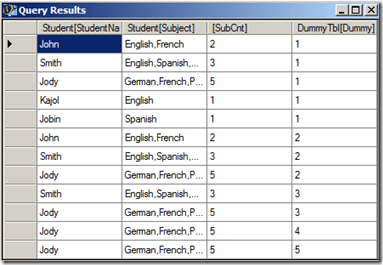

SSAS 2016 CTP2.3 (and newer versions) has a new optimization for this issue – Strict evaluation of IF / SWITCH statements. A branch whose condition is false will no longer result in storage engine queries. Previously, branches were eagerly evaluated but results discarded later on. To prove this, let us execute the original query against SSAS 2016 and see the results.

Now we can see only the relevant measure is being executed. Also, it is faster compared to SSAS 2014 versions of both the original query as well as the workaround. I hope this article will help people who are not on SSAS 2016 to optimize IF statements, and also help understand what the new optimization in SSAS 2016 – Strict evaluation of IF / SWITCH statements actually means. There are also a bunch of new features and optimizations in SSAS 2016 and you should check them out!